Consider the following problem. An NHS trust is devoted to improving its services to provide patients with the best possible experience. The trust sets up a Patient Feedback system in order to identify key areas for improvement. Specialized trust staff (the “coders”) read the feedback and decide what it is about. For example, if the feedback is “The doctor carefully listened to me and clearly explained the possible solutions to me”, then the coders can safely conclude that the feedback is about communication.

But what happens when thousands and thousands of patient feedback records are populating the trust’s database every few days? Can the coders keep up with tagging such a high volume of records? After all, unless they read all of it, they cannot tag it!

We need to find a clever way to get some weight off the coders’ shoulders!

Here in Nottinghamshire Healthcare NHS Foundation Trust, we (the Data Science team) have opted for a Machine Learning approach to help coders tag the incoming patient feedback. In particular, we are developing Text Classification algorithms that “read” the feedback and decide what it is about.

First things first: what are Machine Learning and Text Classification?

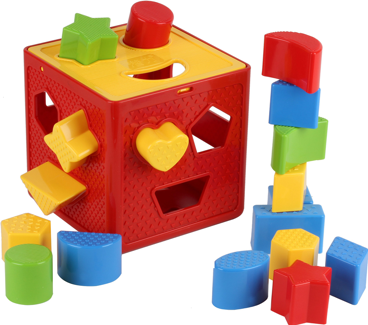

Machine Learning is a wider concept, but here we will talk about the so-called supervised Machine Learning. Say a child is playing with a hole cube:

By trying to pass different shapes through different holes, the child follows a process of “training” or “learning”, through which they learn to identify the right shape for each hole. Once they have been “trained”, they can easily predict what shape is the right one for a specific hole, on this or any other hole cube.

The process that the child has just followed is very similar to Machine Learning: see the child as an algorithm, the shapes as a dataset, and the holes as tags and you have a supervised Machine Learning problem. In other words, in supervised Machine Learning the algorithm “learns from” or “is trained on” the dataset, and thus becomes able to predict what tag corresponds to each record.

So when we have patient feedback data that have already been tagged by our coders, we can train an algorithm to assign the most appropriate tag to each feedback record, based on the content of the feedback text. As fresh, untagged feedback populates the trust’s database, the algorithm is then able to predict the most appropriate tag for it. In other words, the algorithm learns to automatically classify the text according to its content, which is what Text Classification is: a form of supervised Machine Learning that predicts the appropriate tag for a given text.
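To make the idea concrete, here is a minimal sketch of a text classifier written in plain Python. It is not the algorithm our team uses: it is a small Naive Bayes classifier chosen only because it fits in a few lines. The feedback records, the two tags, and the function names (`tokenize`, `train`, `predict`) are all made up for illustration.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical, hand-written examples standing in for feedback tagged by coders.
TAGGED_FEEDBACK = [
    ("The doctor carefully listened to me and explained everything clearly", "communication"),
    ("Nobody told me what was happening or answered my questions", "communication"),
    ("The nurse explained the treatment options", "communication"),
    ("People were smoking outside the entrance", "smoking"),
    ("The smoking area is too close to the ward", "smoking"),
    ("I could smell cigarette smoke in the corridor", "smoking"),
]


def tokenize(text):
    """Lower-case the text and return its alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def train(records):
    """'Learn' from tagged records by counting how often each word appears under each tag."""
    word_counts = defaultdict(Counter)  # tag -> word -> count
    tag_counts = Counter()              # tag -> number of records
    for text, tag in records:
        tag_counts[tag] += 1
        word_counts[tag].update(tokenize(text))
    vocabulary = {word for counts in word_counts.values() for word in counts}
    return word_counts, tag_counts, vocabulary


def predict(text, model):
    """Return the tag with the highest Naive Bayes score for an untagged record."""
    word_counts, tag_counts, vocabulary = model
    total_records = sum(tag_counts.values())
    best_tag, best_score = None, -math.inf
    for tag in tag_counts:
        # Start from how common the tag is, then add evidence from each known word.
        score = math.log(tag_counts[tag] / total_records)
        total_words = sum(word_counts[tag].values())
        for word in tokenize(text):
            if word not in vocabulary:
                continue  # words never seen during training carry no evidence
            # Add-one smoothing so an unseen word/tag pair never has zero probability.
            score += math.log((word_counts[tag][word] + 1) / (total_words + len(vocabulary)))
        if score > best_score:
            best_tag, best_score = tag, score
    return best_tag


model = train(TAGGED_FEEDBACK)
print(predict("The staff listened and explained things to me", model))
print(predict("Too much cigarette smoke near the entrance", model))
```

With only six training records this toy model is easily fooled. A real system would be trained on thousands of coder-tagged records.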

How can Text Classification improve NHS services?

Increase tagging speed. As mentioned earlier, the idea is to have the algorithm automatically tag feedback that the coders simply do not have time to read and tag themselves. This alone makes the tagging process much more efficient.

Narrow down searches for NHS staff. If a member of staff (e.g. a manager, doctor or nurse) wishes to focus on improving an aspect of patient experience such as communication, they will want to read some or all of the incoming feedback about it. The algorithm will crunch the incoming feedback, decide which records are about communication, and pass them on to the member of staff.

Are there any cons?

Algorithms make errors. For example, an algorithm may incorrectly classify feedback about smoking as being about communication. This is to be expected, as no algorithm can ever be 100% accurate. The key, then, is to make the algorithm as accurate as possible for the task at hand. This is something we work on daily.

Despite some inaccuracies, Machine Learning will still offer the great advantage of narrowing down NHS staff searches almost exclusively to the feedback of interest. Suppose a manager has 100 feedback records, of which only 20 are potentially relevant, and the algorithm predicts that 30 are potentially relevant (because it will make a few mistakes). The manager then reads 30 records instead of 100, which is still a 70% reduction in the number of feedback records to be read!
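The arithmetic behind that figure can be sketched in a few lines of Python. The function name `workload_reduction` is ours, chosen for illustration. The last calculation adds an assumption that is not stated in the example: that all 20 relevant records are among the 30 the algorithm flags.

```python
def workload_reduction(total_records, flagged_records):
    """Fraction of records the reader no longer has to go through."""
    return (total_records - flagged_records) / total_records


total = 100     # records in the manager's inbox
relevant = 20   # records that are actually relevant
flagged = 30    # records the algorithm predicts are relevant

reduction = workload_reduction(total, flagged)
print(f"Reduction in records to read: {reduction:.0%}")

# Assumption for illustration only: every relevant record is among the flagged ones.
share_relevant = relevant / flagged
print(f"Share of flagged records that are relevant: {share_relevant:.0%}")
```

Under that assumption, about two in three of the records the manager reads are relevant, compared with one in five without the algorithm.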